Slurper v2

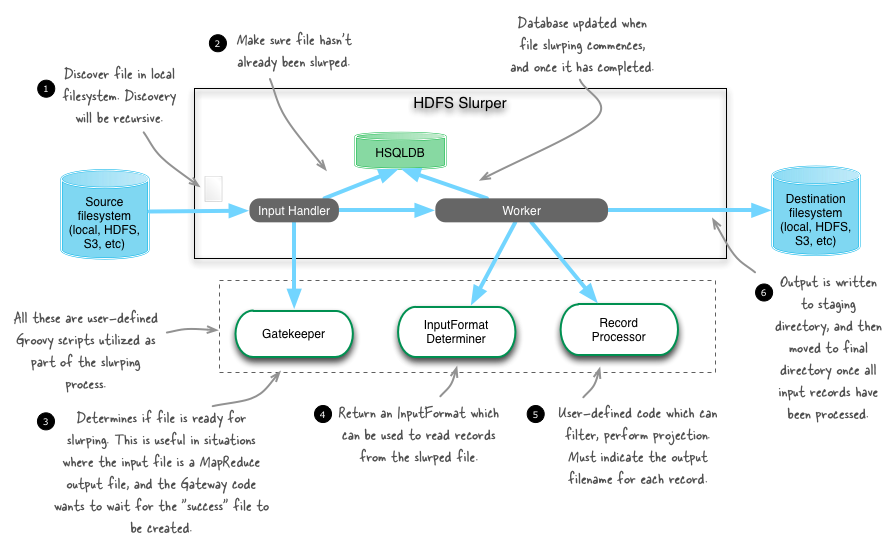

The current HDFS Slurper was created as part of writing “Hadoop in Practice”, and it also happened to fulfill a need that we had at work. The one-sentence description of the Slurper is that it’s a utility that copies files between Hadoop file systems. It’s particularly useful when you want to automate moving files from local disk to HDFS, and vice versa.

While it has worked well for us, with the addition of a few choice features it could be even more useful:

- Filtering and projection, to drop records or fields from input files

- Write to multiple output files from a single input file

- Keep source files intact

As such, I have come up with a high-level architecture for what v2 may look like (subject to change, of course).
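To make the three proposed features a little more concrete, here is a minimal sketch of what they could mean for a delimited text file. This is not the Slurper's code or its v2 design: the function `slurp_lines` and its `keep`, `columns`, and `route` parameters are hypothetical names made up for illustration, and it works on in-memory lines rather than on Hadoop file systems. It filters records, projects a subset of fields, fans records out to several named outputs, and only reads from the source.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Sequence


def slurp_lines(
    lines: Iterable[str],
    keep: Callable[[List[str]], bool],
    columns: Sequence[int],
    route: Callable[[List[str]], str],
    delimiter: str = ",",
) -> Dict[str, List[str]]:
    """Hypothetical helper: filter, project, and route delimited records.

    The source is only iterated over, never modified, which mirrors the
    "keep source files intact" idea.
    """
    outputs: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        fields = line.rstrip("\n").split(delimiter)
        # Filter: skip records the caller does not want.
        if not keep(fields):
            continue
        # Projection: keep only the requested field positions.
        projected = [fields[i] for i in columns]
        # Multiple outputs: the caller decides which output a record goes to.
        outputs[route(fields)].append(delimiter.join(projected))
    return dict(outputs)


# Assumed sample input: date, level, event, user.
source = [
    "2013-01-01,INFO,login,alice\n",
    "2013-01-01,DEBUG,heartbeat,system\n",
    "2013-01-02,ERROR,timeout,bob\n",
]

result = slurp_lines(
    source,
    keep=lambda f: f[1] != "DEBUG",          # drop DEBUG records
    columns=(0, 2, 3),                       # drop the level field
    route=lambda f: f[1].lower() + ".csv",   # one output per level
)
```

With the sample input above, the DEBUG record is dropped, the level field is removed from the remaining records, and each record lands in an output named after its level, while `source` still holds all three original lines.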

About the author

Alex Holmes works on tough big-data problems. He is a software engineer, author, speaker, and blogger specializing in large-scale Hadoop projects. He is the author of Hadoop in Practice, a book published by Manning Publications. He has presented multiple times at JavaOne, and is a JavaOne Rock Star.

If you want to see what Alex is up to you can check out his work on GitHub, or follow him on Twitter or Google+.
