Executing variables that contain shell operators

I touched a little on pipes in a previous post. Here's a quick example: echo outputs two lines, and the pipe operator sends that output to grep, which filters it down to only the lines that contain the word "cat":

shell$ echo -e 'the cat \n sat on the mat' | grep cat

the cat

Cool, since that worked, what do you think will happen if you do the following?

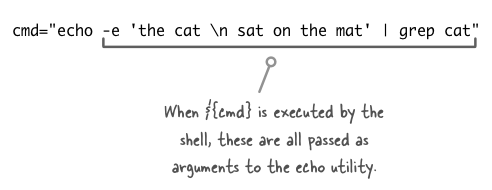

shell$ cmd="echo -e 'the cat \n sat on the mat' | grep cat"

shell$ ${cmd}

In the above example we're simply assigning the original command line to a shell variable and then executing it. So why would the output be this?

shell$ ${cmd}

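'the cat
sat on the mat' | grep cat

This is something that has bitten me in the past when writing shell scripts. The shell recognizes operators such as | and quoting when it first parses a command line, and that happens before variables are expanded. When ${cmd} is expanded, the result only goes through word splitting (and filename expansion), so the single quotes and the | are never treated as syntax. The shell therefore runs a single simple command, echo, and everything after echo, including the pipe, is passed to it as a literal argument.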
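To see what the shell actually hands to echo, here is a minimal sketch, assuming bash as in the transcript above, that prints each word of the unquoted ${cmd} expansion in brackets instead of running it. The set -f and set +f lines are not part of the original example; they only turn filename expansion off so the result cannot depend on files in the current directory.

```shell
# Disable filename expansion so the result cannot depend on which
# files happen to exist in the current directory.
set -f

cmd="echo -e 'the cat \n sat on the mat' | grep cat"

# Print every word the unquoted expansion produces, one per line in brackets.
# Nothing here is parsed as syntax: the quotes and the | are plain characters.
printf '[%s]\n' ${cmd}

# Restore filename expansion.
set +f
```

Running it prints twelve bracketed words, including ['the], [mat'] and [|]. Everything after [echo] is what echo received as arguments in the failing example, which is why those characters were printed back verbatim.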

What we actually need is for the shell to parse the entire contents of cmd as a command line, so that it can set up the pipeline between the two utilities. This is where the eval built-in comes into play: eval concatenates its arguments, separated by spaces, and has the shell parse and execute the result.
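To make the concatenation step concrete, here is a small sketch, assuming bash or another POSIX shell. The printf commands and the "a   b" string are illustrative only and are not from the original post. Both eval calls in the first half print "the cat", and the second half shows how an unquoted variable interacts with the joining step.

```shell
# eval joins its arguments with single spaces and then parses the result
# as ordinary shell input, so both of these run the same pipeline.
eval "printf 'the cat\n' | grep cat"
eval printf "'the cat\n'" '|' grep cat

# Because of that joining step, quoting the variable matters when the
# command contains runs of whitespace.
cmd='echo "a   b"'
eval "$cmd"   # prints: a   b
eval $cmd     # prints: a b (word splitting broke "a   b" apart before eval rejoined it)
```

For that reason eval "$cmd" is usually the safer spelling. In the cat example the unquoted form happens to work because the words in cmd are separated by single spaces, so rejoining them reproduces the original string exactly.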

shell$ eval ${cmd}

the cat

The moral of this story is that if you want to execute a variable that contains shell syntax (such as the pipe in our example), make sure you run it through eval. Other examples of such syntax include redirections (e.g. echo "the cat" > file1.txt), shell conditionals, loops and function definitions.
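The same thing applies to the redirection mentioned above. Here is a short sketch, assuming bash and a system that provides mktemp, which is used only to get a scratch directory and is not part of the original example. The command string combines the redirection with an && conditional.

```shell
# Work in a scratch directory so file1.txt does not overwrite anything.
cd "$(mktemp -d)"

cmd='echo "the cat" > file1.txt && grep -c cat file1.txt'

# Expanded as a plain variable, every token is just an argument to echo,
# so this prints: "the cat" > file1.txt && grep -c cat file1.txt
${cmd}

# Through eval, the > redirection and the && operator take effect:
# file1.txt is created and grep -c prints the matching line count, 1.
eval "$cmd"
```

Since eval executes whatever the string contains, it is best reserved for command lines your script builds itself rather than text that comes from user input.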

About the author

Alex Holmes works on tough big-data problems. He is a software engineer, author, speaker, and blogger specializing in large-scale Hadoop projects. He is the author of Hadoop in Practice, a book published by Manning Publications. He has presented multiple times at JavaOne, and is a JavaOne Rock Star.

